BPDUs

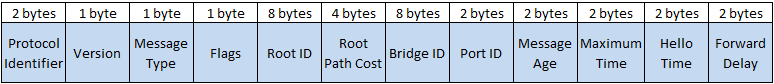

The first thing that STP needs is a way for switches to communicate with each other. This is done by using Bridging Protocol Data Units, or BPDUs. Switches use BPDUs to handle all of the STP communication, such as electing a root switch, communicating the cost to reach the root, topology changes, and timer information. Typically a switch will send out a BPDU every 2 seconds (which is known as the hello time), however this is a configurable value. Let's take a look at what all is in an 802.1d BPDU:

The first two fields, protocol ID and version, would contain the value 0x00 which identifies the frame as an 802.1d type of BPDU. The value of the message type field varies depending on the type of BPDU being sent. If the message type value is 0x00 then it is either a configuration BPDU, which is used in computing the spanning-tree, or it's a topology change notification (TCN). The other possible value for the message type is 0x02 which is used in rapid spanning-tree (discussed later). In the flags field, only the first and last bit are used (the middle 6 bits are always 0). The left-most bit signifies a topology change, while the right-most bit signifies a topology change acknowledgement (TCA). The root ID of course holds the root ID of the root switch and the root path cost field holds the cost to reach that root switch. The bridge ID is the ID of the switch that is actually sending this BPDU. The port ID is used to identify the actual port on the switch from which this BPDU was sent. This value is important because if a switch is receiving its own BPDUs (as might be the case if the switch is plugged into itself via a hub). If that were to happen then the port ID is used to break the tie that would occur in choosing the designated switch (or designated port in this case) since the bridge IDs would be identical.

The message age field is used to help determine when to age out information learned via a BPDU. This value starts out at 0 for BPDUs being created at the root switch. Every subsequent switch who then forwards that BPDU increments the value of the message age field by one. So if you receive a BPDU and the message age is 3, then you know that there are three switches between you and the root switch (on that path anyway). The way a switch uses that value in its age-out calculations is by subtracting the value of the message age from its age-out timer. For example, if a switch is configured to age out spanning-tree info 20 seconds after learning about it from a BPDU and the BPDU has a message age of 3, then the timer starts at 20 - 3 (age-out timer - message age) = 17.

Switches need a way to determine if the root switch is no longer available (maybe it died, maybe a link went down and it's not reachable). The way a switch determines this is by running another time, the maximum time (or maximum age) timer. This is 20 seconds by default in 802.1d and the timer is refreshed each time a new BPDU from the root is received.

The hello time indicates how often hello BPDUs are sent out, while the forward delay field is yet another timer, this one indicating how long a port is to remain in its listening and learning states before moving to a forwarding or blocking state (port states are discussed later in this post).

Electing the Root Switch

When a switch first comes online, it assumes that it is the root switch and sends out BPDUs reflecting that. So imagine again the simple network from above and lets say that this whole network was turned on all at once. In that scenario, each of the 4 switches would send out BPDUs in which they claim to be the root. The way in which the actual root is decided comes down to whoever has the best priority. In STP the lower priority is the better priority. So what happens if all of the switches have the default priority of 32768? Well, fortunately the bridge priority is actually a combination of the configurable priority value and the switch's MAC address, and since MAC addresses are supposed to be unique, there should never be an absolute tie. If switch A's MAC was 1111.1111.1112 and switch B's was 1111.1111.1111 then even though they both have their STP priority set to 32768, B would become root since 1111.1111.1111 < 1111.1111.1112. As soon as a switch receives a BPDU with a bridge priority that is lower than its own, it ceases to claim to be root in its future BPDUs and instead starts sending out BPDUs with the new lower bridge ID as the root. So while switch A in our example might start sending BPDUs like this: [My ID: 1111.1111.1112, Root ID: 1111.1111.1112] as soon as it receives switch B's better BPDU it starts sending out BPDU's that look like this [My ID: 1111.1111.1112, Root ID: 1111.1111.1111]. (Obviously I've omitted fields in the BPDU). Eventually, every switch in the network will have received a BPDU that contains the lowest root ID on the network and at that point the root bridge has been elected.

Building the Tree & Port States

Now that a root for the spanning-tree has been found, we now need to figure out which links to stop using in order to remove possible loops in the network. Switches achieve this by moving some ports from a forwarding state to a blocking state. Once in a blocking state, the switch will no longer forward frames out of that interface, nor will it forward frames received on that interface. But which ports should be blocking and which should be forwarding? To determine this, switches first figure out the best port to use to get back to the root switch. This is done simply by finding the port that has the lowest cost to the root. In 802.1d, the cost of a port is determined by the speed of the link as follows:

| Speed | Cost |

| 4Mbps | 250 |

| 10Mbps | 100 |

| 16Mbps | 62 |

| 100Mbps | 19 |

| 1Gbps | 4 |

| 2Gbps | 3 |

| 10Gbps | 2 |

Let's look at an example network again:

In this example, switch B is again the root switch and we'll take a look at choosing the best port to reach B from switch A's perspective. Switch A is directly connected to B on a 100Mpbs link, which would give that interface a cost of 19. Switch C is also directly connected to B, but on a gigabit link which means that C has a path to the root at a cost of only 4. When switch C sends its BPDU to switch A it advertises that path. Switch A is connected to switch C on a gigabit link as well, so the path to switch C is 4, so if you add that to switch C's advertised cost to reach the root of 4 you get 4 + 4 = 8, which is of course less than 19, the cost of the directly connected port to switch B. This means that Switch A would choose the path through C to reach the root switch and that interface connecting to switch C would be known as the root port (since it's the port the switch uses to get to the root). Each switch will only have one root port, since multiple ports that lead to the root would be in itself a loop, thus defeating the purpose of STP.

Now that each switch has figured out the best path to the root switch, it then needs to figure out who gets to have the designated port on each LAN segment. To make this example easier to understand, let's add another switch to our network:

Both switch A and D are going to send out hello BPDUs onto the LAN segment that connects them to each other. What needs to be decided is which switch will have a designated port on that segment and which will be blocking. This is done by choosing the switch that has the lower cost to reach to root in their BPDUs, and since switch A has a cost the root of 8 and switch D has a cost of 19, switch A gets to have the designated port on that segment and switch D moves its port to a blocking state.

Blocking and forwarding are only two of the four possible states for a port when running 802.1d. When a topology change occurs, such as a link going down or a new link coming up, ports first enter a state called listening. When a port is listening, it is processing BPDUs but it is not forwarding either ingress or egress traffic. If STP decides that this port should be moved to the forwarding state, it must first be moved to the learning state. In this state the port is still not forwarding frames, but it is learning about source MAC addresses on its segment and populating the MAC address table.

Convergence Time & Vlans

One of the most important issues with STP is how long it takes to notice and respond to a change in topology. Unfortunately, 802.1d isn't exactly fast at at reacting to a change, especially by today's standards. For example, if a link on the current path to the root switch were to go down then a switch may wait for the maximum timer to expire, which is 20 seconds be default, before considering an alternate path. Then, the listening and learning states each take 15 seconds, so that's 50 seconds before the switch starts forwarding on an alternate path, which is a pretty poor response time. This is greatly improved in the next IEEE version of STP, 802.1w which we'll go over in an upcoming post.

However, convergence time is not the only obvious problem with 802.1d. One thing that the IEEE failed to consider in both 802.1d and 802.1w is vlan trunking. In both versions, the spanning-tree rules apply to the entire port, not matter whether or not a loop is only present in a single vlan. Cisco, on the other hand, did take vlans into consideration with their implementation of STP known as Per-Vlan Spanning-Tree (PVST) which will be the topic of the next post.